17758013020 Chen Chen

17816169069 Jinglin Jian

Dr. Wenbo Ding is an associate professor and leads the Smart Sensing and Robotics (SSR) group at the Tsinghua-Berkeley Shenzhen Institute (TBSI), Tsinghua University. He received the B.E. and Ph.D. degrees (both with the highest honors) from the Department of Electronic Engineering, Tsinghua University, Beijing, China, in 2011 and 2016, respectively. From 2016 to 2019 he was a postdoctoral research fellow in Materials Science and Engineering at Georgia Tech, Atlanta, GA, under the supervision of Professor Z. L. Wang. He has published over 70 journal and conference papers and received many prestigious awards, including the National Early-Career Award, the IEEE Scott Helt Memorial Award for the best paper published in IEEE Transactions on Broadcasting, the 2019 and 2022 Natural Science Award (Second Prize) from the Institute of Electronics, and the Gold Medal and Special Prize at the 47th International Exhibition of Inventions of Geneva. His research interests are interdisciplinary and include self-powered sensors, energy harvesting, wearable devices for health, and soft robotics, supported by signal processing, machine learning, and mobile computing.

Flexible Visuo-tactile Sensing for Object Recognition and Grasping

Shoujie Li, Wenbo Ding

Tsinghua-Berkeley Shenzhen Institute, Shenzhen International Graduate School, Tsinghua University, Shenzhen, China (lsj20@mails.tsinghua.edu.cn, ding.wenbo@sz.tsinghua.edu.cn)

Abstract

Grasping transparent objects is challenging but important for robots. In this article, we propose a visual-tactile fusion framework for grasping transparent objects in complex backgrounds. The framework combines the advantages of vision and touch and greatly improves the grasping efficiency for transparent objects. First, we propose a multi-scene synthetic grasping dataset named SimTrans12K, together with a Gaussian-Mask annotation method. Next, based on the TaTa gripper, we propose a grasping network named the transparent object grasping convolutional neural network (TGCNN) for grasping position detection, which performs well in both synthetic and real scenes. Inspired by human grasping, we design a tactile calibration method and a visual-tactile fusion classification method, which improve the grasping success rate by 36.7% over direct grasping and the classification accuracy by 39.1%. Furthermore, a Tactile Height Sensing (THS) module and a Tactile Position Exploration (TPE) module are added to handle transparent objects in irregular scenes and in scenes where they cannot be detected visually. Experimental results demonstrate the validity of the framework.
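The abstract names a Gaussian-Mask annotation method without defining it. As a rough illustration only, the sketch below assumes that such an annotation is a 2D heatmap that peaks at a labeled grasp point and decays with a Gaussian profile. The function name `gaussian_mask`, the image size, the grasp point, and the value of `sigma` are all hypothetical and are not taken from the paper.

```python
import math


def gaussian_mask(height, width, center, sigma):
    """Build a height x width heatmap that equals 1.0 at `center` (row, col).

    Assumption: the label is an isotropic Gaussian around a single grasp
    point. The actual annotation in the cited work may differ.
    """
    cy, cx = center
    return [
        [
            math.exp(-((r - cy) ** 2 + (c - cx) ** 2) / (2.0 * sigma ** 2))
            for c in range(width)
        ]
        for r in range(height)
    ]


# Hypothetical 64 x 64 label with a grasp point at row 20, column 40.
mask = gaussian_mask(64, 64, center=(20, 40), sigma=5.0)

# The highest-valued pixel is the labeled grasp point.
peak = max(
    ((r, c) for r in range(64) for c in range(64)),
    key=lambda rc: mask[rc[0]][rc[1]],
)
```

Compared with a binary mask, a soft label of this kind gives a detection network a graded target around the grasp point, which is one plausible reason for choosing a Gaussian profile.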

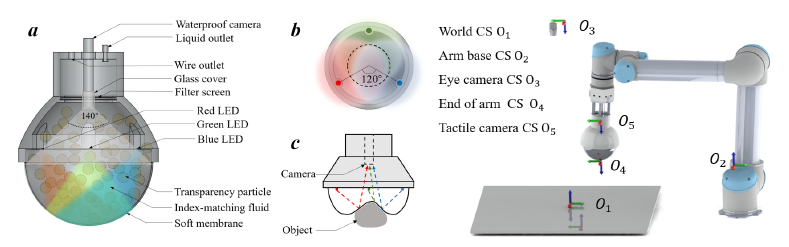

Figure 1: The structure of the TaTa gripper.

References

[1] Shoujie Li, Haixin Yu, Wenbo Ding, Houde Liu, Linqi Ye, Chongkun Xia, Xueqian Wang, Xiao-Ping Zhang. “Visual-tactile Fusion for Transparent Object Grasping in Complex Backgrounds,” IEEE Transactions on Robotics, 2023.